Unlocking the business value from AI in Pharma

16 June 2025

Artificial Intelligence (AI) holds tremendous promise across the pharmaceutical and life sciences industry. Estimates suggest that, if scaled effectively, AI could generate up to $254 billion in additional annual operating profits for pharma companies globally by 2030 [1]. From accelerating drug discovery to enhancing patient recruitment, streamlining regulatory documentation and approvals, and enabling tailored communications with both healthcare professionals and patients, AI is positioned to fundamentally transform the pharma value chain.

Yet despite billions invested and numerous successful pilots, organisations continue to struggle with scaling AI to unlock its full business value.

Why is realising this value so difficult?

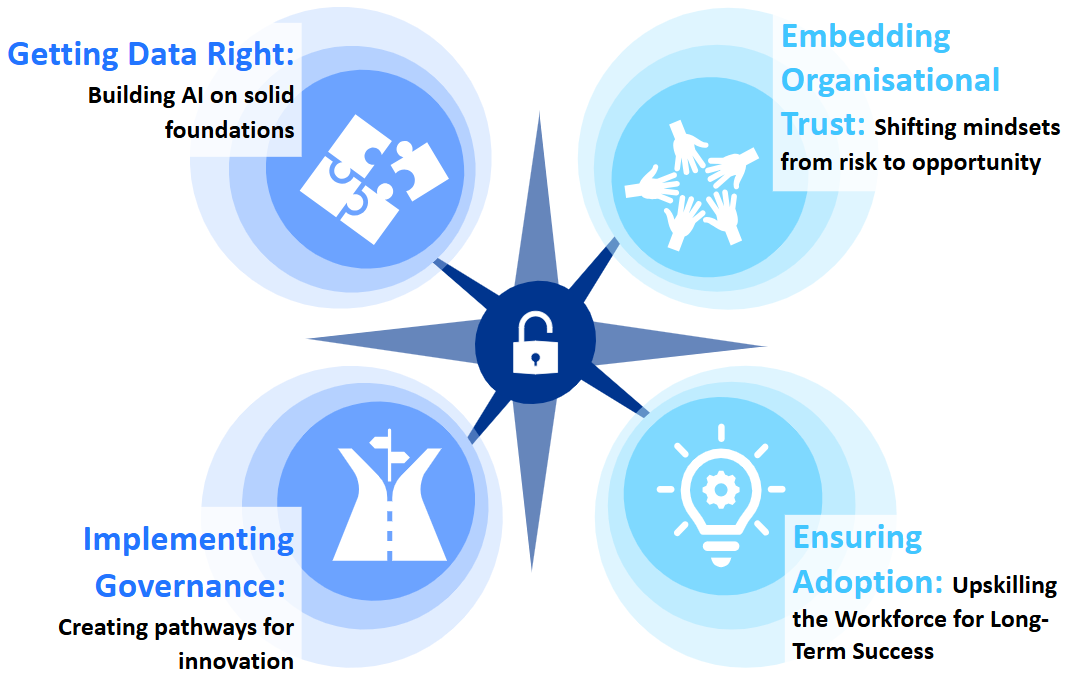

Drawing on our experience, we explore four areas where life sciences companies frequently fall short – and outline the critical enablers to achieving both scale and value from AI investments.


Getting data right: building AI on solid foundations

AI is only as effective as the data it is built upon, and across the pharma industry data maturity remains a persistent blocker.

High-impact AI use cases in life sciences often require integrating multi-modal data types (e.g. omics, imaging, Electronic Health Records, Case Report Forms, lab data), spanning multiple functions from early discovery to real-world evidence. These datasets are frequently siloed, governed inconsistently, or formatted for primary rather than secondary use. As a result, data scientists commonly spend up to 80% of their time [2] cleaning and wrangling data before they can even begin model development. We have seen leading companies spend over two years resolving data quality issues before any real AI value was realised.

Incorporating data maturity assessments early in AI programme planning is critical. Frameworks such as FAIR (Findable, Accessible, Interoperable, Reusable) and ALCOA++ (Attributable, Legible, Contemporaneous, Original, Accurate, etc.) offer a robust method for evaluating dataset readiness, aligning expectations, and identifying the level of effort required for the data to be useable.

Key get-rights:

- Include data availability and maturity evaluation as part of the AI use case scoping process

- Apply data frameworks (FAIR, ALCOA++) when prioritising AI use cases to highlight systemic data issues

- Quantify and incorporate data readiness effort into use case planning, including data cleaning, integration, and validation activities, to ensure accurate resourcing and realistic timelines
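As a rough illustration of how a data maturity evaluation could feed into use case scoping, the sketch below scores each dataset against the four FAIR dimensions and treats a use case as only as ready as its least mature dataset. The 0 to 3 scoring scale, the gap threshold of 2, the "weakest dataset" rule, and the example datasets and scores are all assumptions made for illustration; they are not part of the FAIR principles or of any specific assessment method.

```python
from dataclasses import dataclass

# The four FAIR dimensions named in the text.
FAIR_DIMENSIONS = ("findable", "accessible", "interoperable", "reusable")

# Assumed scoring scale: 0 (absent) to 3 (mature). Hypothetical, not part of FAIR.
MAX_SCORE = 3
# Assumed threshold below which a dimension is flagged as a gap.
GAP_THRESHOLD = 2


@dataclass
class DatasetAssessment:
    name: str
    scores: dict[str, int]  # FAIR dimension -> score on the assumed 0-3 scale

    def readiness(self) -> float:
        """Fraction of the maximum possible FAIR score, between 0 and 1."""
        total = sum(self.scores[d] for d in FAIR_DIMENSIONS)
        return total / (MAX_SCORE * len(FAIR_DIMENSIONS))

    def gaps(self) -> list[str]:
        """Dimensions scoring below the assumed gap threshold."""
        return [d for d in FAIR_DIMENSIONS if self.scores[d] < GAP_THRESHOLD]


def use_case_readiness(datasets: list[DatasetAssessment]) -> float:
    """Assumed rule: a use case is only as ready as its least mature dataset."""
    return min(ds.readiness() for ds in datasets)


if __name__ == "__main__":
    # Hypothetical datasets and scores for a single use case.
    ehr = DatasetAssessment(
        "EHR extract",
        {"findable": 3, "accessible": 2, "interoperable": 1, "reusable": 1},
    )
    omics = DatasetAssessment(
        "Omics panel",
        {"findable": 2, "accessible": 3, "interoperable": 2, "reusable": 2},
    )
    for ds in (ehr, omics):
        print(f"{ds.name}: readiness={ds.readiness():.2f}, gaps={ds.gaps()}")
    print(f"Use case readiness: {use_case_readiness([ehr, omics]):.2f}")
```

In practice the flagged gaps, rather than the single score, are the more useful output: each gap can be translated into a cleaning, integration, or validation activity with its own effort estimate in the use case plan.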

| Supporting a global pharma to unlock AI value through data governance: We worked with a major biopharma organisation to implement a cross-functional data governance model aligned to FAIR principles. By mapping ownership and embedding standards into project planning, we enabled faster alignment across teams and accelerated use case development for clinical and commercial applications. |

Implementing governance: creating pathways for innovation

In a highly regulated industry like pharma, a robust and structured governance model is critical not just for compliance but also as a key enabler of AI innovation.

Too often, we see promising AI initiatives stall due to the absence of a clear governance process that supports the full lifecycle of AI use cases—from idea generation and review to piloting and business-wide deployment. Without this structure, organisations face repeated challenges in raising new AI ideas, securing approvals, or scaling solutions that have proven value. Concerns about model transparency and auditability can amplify hesitancy from the business in pushing AI use cases into production.

A Gartner survey of life science executives (June 2024) [3] found that while 92% had at least one use case in pilot, only 30% had scaled more than six, and over half had abandoned an initiative due to budget constraints. This highlights the need for governance that prioritises use cases and optimises resource allocation.

Governance should be embedded from the outset, supported by cross-functional bodies that connect business needs with technical oversight, and designed to promote both responsible use and rapid scaling.

Key get-rights:

- Establish an end-to-end governance model that enables use case generation, review, testing, and deployment, ensuring that ideas from across the business can be raised and actioned effectively

- Define clear approval criteria and escalation pathways aligned to regulatory standards, risk tolerance, and business priorities to ensure timely and confident decision-making

- Embed explainability, auditability, and accountability into model development to build trust, ensure transparency and compliance, and support enterprise-wide adoption

| Establishing AI governance within a regulated environment: We partnered with a government agency to define and operationalise a consumer-facing process, building out a tailored, machine-learning-enabled solution to improve efficiency and overall customer experience. Delivery required empowering cross-functional decision-making and ensuring robust, transparent oversight, including technical review boards, ethical risk assessments, and documentation standards tailored for a regulated environment. |

Embedding organisational trust: shifting mindsets from risk to opportunity

Scaling AI is not just a technical challenge – it’s a cultural one.

In many pharma companies, a cautious approach to new technologies is deeply ingrained, driven by risk aversion, compliance requirements, and an uncompromising focus on patient safety. While these priorities are non-negotiable, they often create internal confusion around the legitimacy or appropriateness of using AI tools in day-to-day work. This hesitancy is echoed in wider sentiment: in 2024, 42% of UK respondents expressed concern about AI, describing its reasoning and decision-making processes as “unknowable” [4].

Leadership plays a pivotal role in reshaping this mindset.

Senior leaders must model the behaviour they want to see, sharing openly how they themselves are experimenting with AI, using company-approved tools, and understanding both the opportunities and limits. Creating safe spaces for experimentation – where employees are encouraged to test and learn – builds momentum and trust.

Key get-rights:

- Encourage leaders to role-model AI usage and openly communicate their own learning journeys

- Ensure access to approved tools at all levels to create opportunities for hands-on experimentation

- Create a psychologically safe environment for employees to experiment with AI applications in their work, and celebrate wins and positive outcomes

| Building trust in AI at scale in a global pharma: We supported a global pharma client in running immersive AI leadership sessions, enabling senior leaders to explore GenAI tools hands-on. The sessions were followed by a reverse mentoring programme in which employee experts within their respective teams were paired with peers and leaders to train them and act as a point of contact. By demystifying AI and equipping teams with the knowledge to speak confidently and transparently, we catalysed broader cultural change across their business functions. |

Driving adoption: upskilling the workforce for long-term success

For AI to become embedded in how pharma operates, workforce capability must catch up with ambition.

Our clients regularly cite the need to reskill teams in data literacy, digital fluency, and AI adoption. In a recent Baringa-led survey, almost two-thirds of respondents said they would like to see more education on AI in workplaces [5]. However, internal training often lags behind, limited by time, funding, or fear of failure. Upskilling individuals must be positioned as a core enabler of AI-driven transformation.

We recommend starting with team leaders, equipping them to champion AI adoption, and creating the time and capacity to cascade learning within teams through immersive experiences that let employees engage with AI directly in real, hands-on scenarios. These sessions must create a safe environment where individuals feel empowered to ask questions, test ideas, and build their skills, fostering both technical capability and trust in digital technologies.

Key get-rights:

- Focus learning curricula on use cases that align with existing approved tools and governance where possible, to ensure immediate relevance and application

- Prioritise experiential over theoretical sessions, using interactive formats that allow employees to engage directly with AI tools in safe, supportive environments

- Build digital literacy into individual objectives, development plans, and recognition systems as a core competency

| Developing an AI-literate workforce for a global pharma: We worked with a global pharma to design and deliver an “AI bootcamps” development programme to over 300 R&D data scientists, scientists and clinicians. These bootcamps created space for hands-on learning, embedded new mindsets and behaviours, and led to a measurable uplift in employee confidence and capability. |

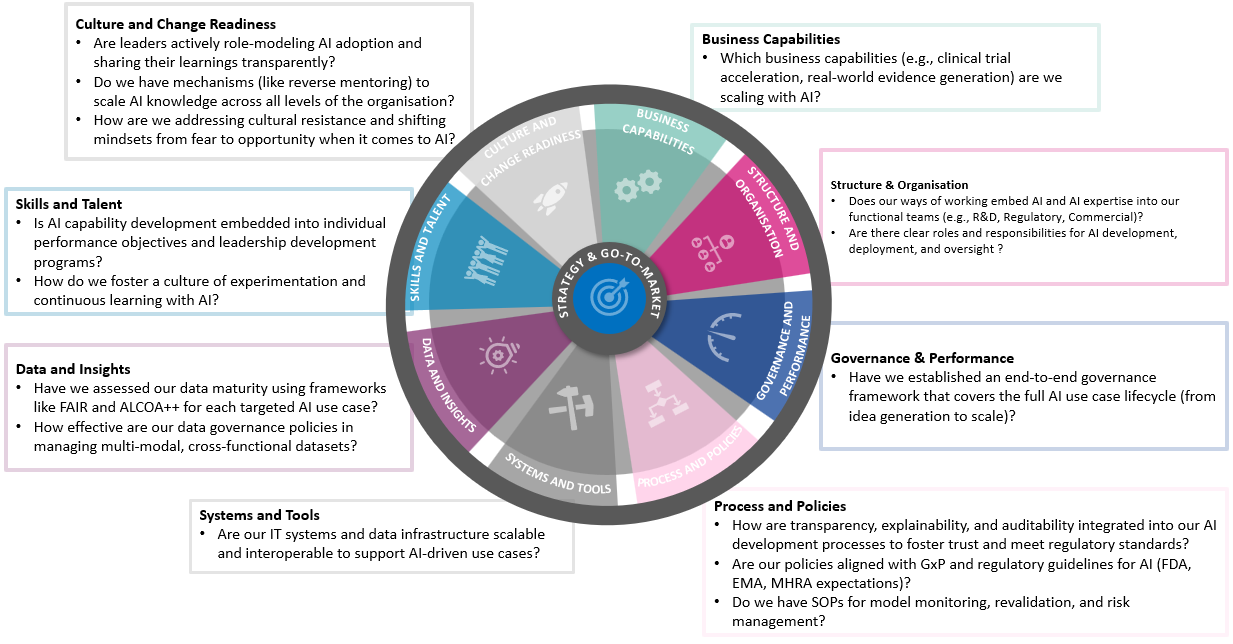

Targeted AI readiness: prioritising efforts for scalable impact

In all of the case studies above, we used our AI readiness model to understand where each organisation needed to focus effort first. The Baringa AI readiness assessment offers a comprehensive evaluation of an organisation’s preparedness to embed and scale AI across its operating model, laying the foundation for confident, strategic action. Not every area will be equally relevant: the right focus depends on the specific priorities and goals of your teams.


Baringa brings together deep expertise in pharma and life sciences with strong technical capabilities across data and analytics, people-led change, technology-enabled business transformation, and learning and development.

Whether you’re launching your first AI pilots or embedding AI into core operations, we can help you turn ambition into measurable, business-wide impact.

To find out more, get in touch with our team: Kate Moss, Laveshni Reddy, Chris Maxted or David Logue.

References:

- [1] Re-inventing Pharma with artificial intelligence, Strategy& https://www.strategyand.pwc.com/de/en/industries/pharma-life-sciences/re-inventing-pharma-with-artificial-intelligence.html

- [2] The Value of Data Catalogs for Data Scientists, Enterprise Knowledge https://enterprise-knowledge.com/the-value-of-data-catalogs-for-data-scientists/

- [3] 2Q24 LLMs and Generative AI: Healthcare Provider Benchmarks and Trends, Gartner https://www.gartner.com/en/documents/5635391

- [4] TRUTH: people value what they know most - other people, Baringa https://www.baringa.com/en/insights/balancing-human-tech-ai/truth/

- [5] Education truly empowers us as consumers and society, and is a big missing piece of the current puzzle, Baringa https://www.baringa.com/en/insights/balancing-human-tech-ai/control/
