Responsible AI: shaping the modern world and its implications for governments

3 November 2023

In today’s fast-paced world, artificial intelligence (AI) has emerged as a transformative force with the potential to revolutionise every sector of society. However, as AI’s influence expands, it becomes imperative to prioritise responsible AI practices. Responsible AI refers to the ethical and accountable development, deployment, and use of AI systems. We explore the importance of responsible AI in the modern world and delve into its implications for governments.

In the spirit of the subject, we asked ChatGPT (via OpenAI's publicly available application) for its thoughts, which we then critique with a Baringa view! And yes, ChatGPT wrote that introduction, too…

The opportunity AI presents for governments: efficiency and citizen experience

ChatGPT: AI offers UK government organizations the potential to streamline processes, enhance efficiency, and improve citizen experiences. Here's how AI can help:

- Automation and Administration: AI automates administrative tasks, saving time and resources. Chatbots handle common queries, improving citizen interactions.

- Data Analysis and Insights: AI analyses large amounts of data, providing actionable insights for evidence-based decision-making.

- Enhanced Citizen Services: AI improves citizen experiences by offering personalised recommendations and user-friendly interfaces for accessing government services.

- Fraud Detection and Security: AI detects fraudulent activities through advanced algorithms, protecting public funds and maintaining program integrity.

By embracing responsible AI practices, UK government organizations can drive efficiency, deliver better services, and improve citizen experiences while ensuring privacy, addressing biases, and maintaining transparency and accountability.

Baringa: As you might expect for a technology that has captured the attention of the media, government, business and the public, this is a strong answer.

The UK government, like governments around the world, faces the ongoing challenge of maximising efficiency while allocating limited resources to high-value tasks that require human interaction. To address this, AI can play a significant role in helping governments run more efficient and effective services. By automating routine administrative tasks and implementing AI-powered solutions like chatbots, governments can save valuable time and resources, allowing employees to focus on more critical tasks.

Furthermore, AI can improve citizens' everyday experiences by offering personalised recommendations, intuitive interfaces, and streamlined access to government services. Through the responsible use of AI, governments can optimise operations and deliver improved services that meet the evolving needs of their citizens.

ChatGPT’s answer is also indicative of its capabilities. The response is positive but mild compared with the current hype surrounding generative AI. Generative AI is rapidly evolving from a supportive content-creation tool into a skilled digital assistant capable of handling a huge array of tasks, from meeting transcription and note-taking to presentation design and data analysis (and even poetry!), and it is likely to become a tool that people grow increasingly used to.

A generative AI tool’s output is a response to a ‘user prompt’ (what you ask it to do), but it is heavily dependent on the ‘system prompt’ (hidden context that the tool considers before responding). This may be what is leading to a more restrained response: a ‘system prompt’ could, for example, stipulate that responses should offer only positive views of artificial intelligence. System prompts can be configured in custom AI solutions but are hidden in proprietary AI services like ChatGPT, and governments must be mindful of this when procuring AI services.
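To make the distinction concrete, here is a minimal Python sketch, assuming the role/content message format that many chat-style model APIs use. The helper `build_chat_request` and both system prompts are hypothetical, and no model is actually called; the point is only that the same user prompt can arrive alongside very different hidden instructions.

```python
from typing import Dict, List


def build_chat_request(system_prompt: str, user_prompt: str) -> List[Dict[str, str]]:
    """Hypothetical helper: return the ordered messages a model would see for one turn."""
    return [
        # Hidden context set by whoever configures the service; in a
        # proprietary product the end user never sees this message.
        {"role": "system", "content": system_prompt},
        # The visible question typed by the user.
        {"role": "user", "content": user_prompt},
    ]


user_prompt = "What opportunities does AI present for UK government organisations?"

# Two illustrative (hypothetical) system prompts paired with the same user prompt.
neutral = build_chat_request("You are a helpful assistant.", user_prompt)
constrained = build_chat_request(
    "You are a helpful assistant. Only present positive views of artificial intelligence.",
    user_prompt,
)

# The user prompt is identical, but the full context the model receives differs.
print(neutral[1] == constrained[1])  # True
print(neutral[0] == constrained[0])  # False
```

In a procured service, the user typically controls only the second message, which is why the configuration of the first is worth asking vendors about.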

To ensure the responsible use of AI, governments will need to focus on education about AI capabilities and configuration, and on raising awareness of the potential for AI to introduce or reinforce biases. We’ve outlined a broader view of a responsible AI framework below.

The importance of Responsible AI

ChatGPT: Responsible AI is crucial for maximizing benefits and minimizing harm. It involves fairness, transparency, accountability, and privacy protection. Fairness ensures unbiased outcomes, transparency fosters trust and understanding, accountability holds users and developers responsible, and privacy protection safeguards personal information. Responsible AI also promotes ethical data treatment, adhering to privacy regulations and user consent.

Baringa: Our view on this certainly comprises all the elements ChatGPT cites here, but we’d add that Responsible AI practices can ensure there’s no autonomous harm, and ultimately, that AI is good for society.

Whilst AI presents a significant opportunity for governments, it also introduces significant new risks. As governments deploy AI in increasingly important areas of citizens’ lives, such as healthcare, international travel, policing and welfare systems, the potential for, and scale of, inadvertent direct or indirect harm grows. Even a minor flaw in these AI applications could cause individuals harm and potentially limit their means of recourse. Generative AI is still liable to ‘hallucinate’, generating credible-sounding falsehoods and inaccuracies. In at least one publicised instance, ChatGPT invented previous court cases, which lawyers then presented as fact. It’s of utmost importance that governments strike the right balance between safeguarding against such risks, protecting personal data and fostering innovation in the public, private and third sectors. By providing accessible data assets that connect disparate datasets, governments can play a crucial role in supporting the development of AI applications.

For multiple reasons, responsible AI is far more critical, and more challenging, in the public sector than in the private sector. Public sector AI projects are likely to use citizens’ most sensitive personal information to inform decisions with the potential for the greatest impact on their lives. Adding to the challenge is the need to ensure that datasets are universally accessible and consistently applied across an ever more diverse society.

While many frameworks have been published to support Responsible AI, many of them are very high level and lack practical advice. We believe that a robust framework should focus on the following areas: Social good; Robustness and safety; Interpretability; Accountability; No autonomous harm; Research-led; Privacy; No discrimination; and Impact.

Implementing Responsible AI

ChatGPT: Organizations implement Responsible AI by following these key steps:

- Ethical Framework: They establish an ethical framework that aligns AI practices with societal values, human rights, and legal requirements.

- Data Governance: They implement robust data governance practices, ensuring data privacy, protection, and minimizing biases in training datasets.

- Transparency and Explainability: They strive for transparency by providing clear explanations of AI algorithms, decision-making processes, and potential limitations to build trust with users.

- Continuous Monitoring: They monitor AI systems for unintended consequences, biases, and potential risks, taking prompt corrective actions as needed.

- User Engagement: They actively involve users and stakeholders in the design, development, and deployment of AI systems, incorporating diverse perspectives and feedback.

Baringa: We don’t disagree with ChatGPT here…

When implementing AI applications, whether customer- or citizen-facing or intended to improve organisational efficiency, you need to:

- Know the scope of your AI usage

- Know it’s working and doing what you intend it to

- Make sure it doesn’t discriminate or negatively impact your organisation

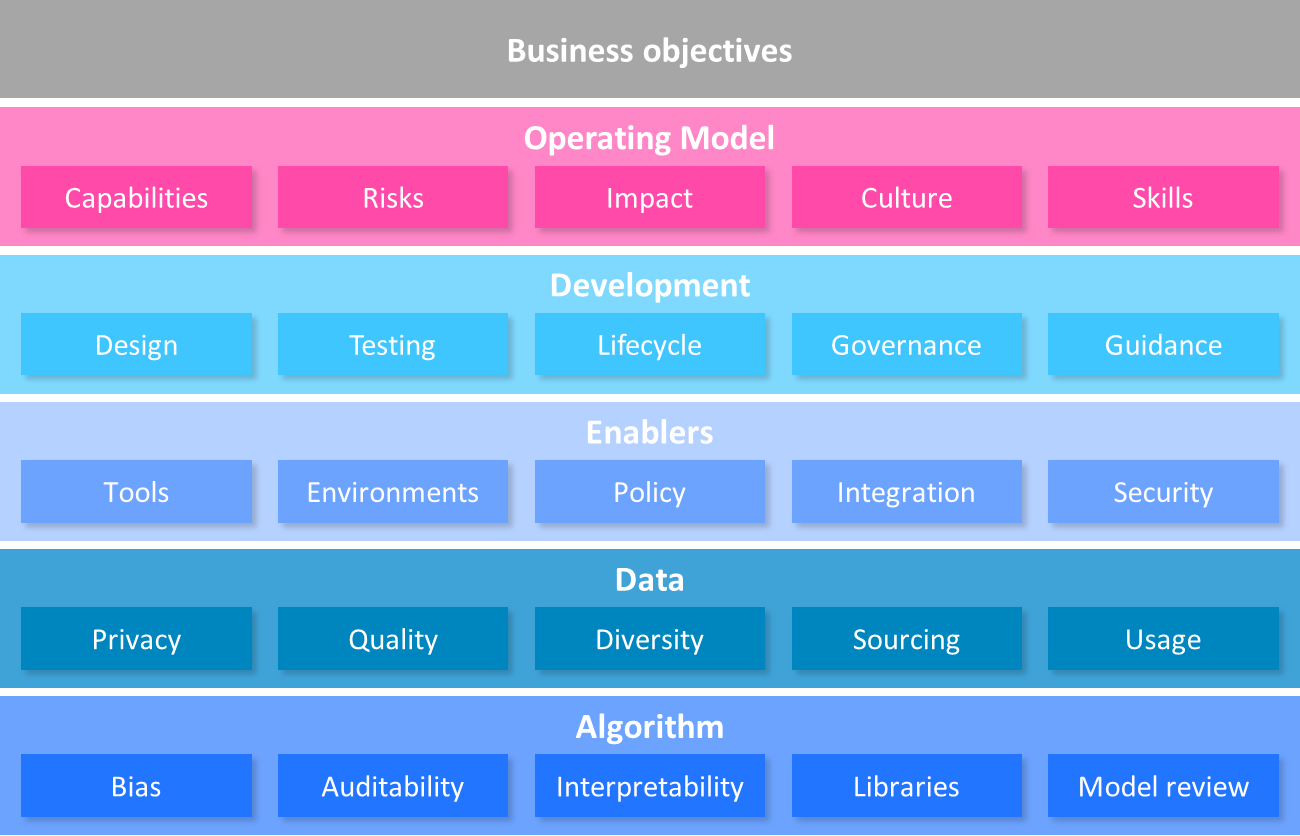

Our approach to implementing Responsible AI prioritises accountability and transparency, ensuring that AI applications are controlled and governed. It focuses on:

- Operating Model: Ensuring that capabilities, risk management, impact monitoring, culture and social benefit are baked into the operating model and incentive structures.

- Development: Ensuring that application design, testing and deployment are actively monitored, with clear guidance and documentation, supported by robust governance.

- Technology: Ensuring that tools, environments, data stores and integration tools support the Responsible AI principles.

- Data: Ensuring that model training uses diverse data to minimise the potential for discrimination, that data sourcing and usage is clear, and that data is of a sufficient quality to drive effective outcomes.

- Algorithm: Ensuring algorithms are unbiased (or inherent bias is clear, understood and mitigated), interpretable and auditable to eliminate ‘black box’ decisions.
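One way to make the ‘unbiased algorithm’ check auditable is to compare outcome rates between groups. The Python sketch below computes a demographic parity difference, one of several common fairness metrics, chosen here purely for illustration; the sample decisions, group labels and the 0.1 threshold are all hypothetical. A large gap does not prove discrimination on its own, but it shows reviewers where to look.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def selection_rates(records: List[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of positive outcomes (e.g. applications approved) per group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += int(outcome)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(records: List[Tuple[str, bool]]) -> float:
    """Largest gap in positive-outcome rate between any two groups."""
    rates = selection_rates(records)
    if not rates:
        return 0.0  # no decisions recorded, so no gap to report
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: (group label, did the model approve?)
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

gap = demographic_parity_difference(decisions)
print(selection_rates(decisions))  # {'group_a': 0.75, 'group_b': 0.25}
print(gap)  # 0.5

# Hypothetical tolerance: the right threshold is a policy decision, not a technical one.
THRESHOLD = 0.1
if gap > THRESHOLD:
    print("Flag for review: outcome rates differ materially between groups")
```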

These policies should apply whether developing custom AI solutions, using AI embedded in other solutions or using prebuilt AI services. For third-party proprietary solutions it is harder to explain the data used and the potential for algorithmic bias, but these areas still need equal consideration for any AI used in a production setting.

Implications for Governments

ChatGPT: Governments play a critical role in shaping the development and deployment of AI systems. They must establish regulatory frameworks and policies for responsible AI. This includes legal, ethical, and technical considerations to address risks and guide implementation.

- Policy and Regulation: Comprehensive regulations balance innovation and accountability, covering data privacy, transparency, bias, and cybersecurity.

- Collaborative Efforts: Engaging experts, researchers, and civil society shapes responsible AI standards, ensuring a holistic approach to address challenges and implications.

- Ethical AI Procurement: Governments influence the market by demanding ethical solutions during procurement, promoting responsible practices among vendors and developers.

- Education and Awareness: Investing in AI education equips citizens, policymakers, and public servants with knowledge and skills for ethical, social, and legal aspects. Raising awareness fosters an informed citizenry and encourages public engagement in shaping AI policies.

Governments must proactively establish regulations, foster collaborations, prioritize ethical procurement, and invest in education. This ensures responsible AI use, maximizing benefits while minimizing risks.

Baringa: That’s a fairly comprehensive summary…

Governments worldwide are adopting different approaches to AI regulation. The UK is exploring a pro-innovation approach and has published guidance for civil servants' use of generative AI, while the US is considering the Algorithmic Accountability Act and an AI Bill of Rights. China has already implemented algorithm and transparency regulations, particularly for generative AI, and is looking to strengthen them further. On the other hand, countries like India are taking a more hands-off approach. However, as seen with previous technological advancements, international cooperation and alignment on regulation will be crucial.

In addition to setting regulations and procuring AI services, it’s vital for governments to lead by example in their own use of AI tools. Given the potential impact on citizens, responsible AI governance should underpin governments’ procurement, development and deployment of AI applications.

We believe that governments will need to be agile and responsive in this fast-evolving area. Small, rapid pieces of guidance and regulation that are easy for organisations to adopt, and easy for citizens to understand, are likely to be most effective. Governments face great pressure to innovate in a responsible way and are held to higher standards than the private sector.

The inherent complexity of AI, compared with other significant technological advances, makes it particularly difficult for governments to manage. Here are a few initiatives we think governments should consider:

- Guidance for the public on how to use easily accessible AI services.

- Standard ways to explain how an AI solution works; Google’s model cards are a step in the right direction here.

- Ways to respond to the complex and fast-moving (sometimes daily) advances in AI.
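To illustrate what a standard way of explaining an AI solution might look like in practice, here is a minimal Python sketch of a model-card-style record. It is loosely inspired by the kinds of sections model cards cover (intended use, training and evaluation data, metrics, ethical considerations, caveats), but the `ModelCard` class, its fields and the example service are hypothetical, not Google’s schema or tooling.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class ModelCard:
    """Hypothetical, simplified model card; not an official schema."""
    name: str
    intended_use: str
    out_of_scope_uses: str
    training_data: str
    evaluation_data: str
    metrics: str
    ethical_considerations: str
    caveats: List[str] = field(default_factory=list)

    def missing_sections(self) -> List[str]:
        """Names of text sections left blank, so reviewers can spot gaps."""
        return [
            f.name for f in fields(self)
            if isinstance(getattr(self, f.name), str) and not getattr(self, f.name).strip()
        ]

    def to_text(self) -> str:
        """Render the card as plain text that a non-specialist can read."""
        lines = []
        for f in fields(self):
            value = getattr(self, f.name)
            if isinstance(value, list):
                value = "; ".join(value) if value else "(none recorded)"
            label = f.name.replace("_", " ").title()
            lines.append(f"{label}: {value or '(not provided)'}")
        return "\n".join(lines)


# Hypothetical example service, with evaluation data deliberately left blank.
card = ModelCard(
    name="Benefits enquiry triage (hypothetical)",
    intended_use="Route citizen enquiries to the right team; a human makes every decision.",
    out_of_scope_uses="Deciding eligibility or payment amounts.",
    training_data="Anonymised historical enquiries (source and date range to be documented).",
    evaluation_data="",
    metrics="Routing accuracy, reported separately for each enquiry channel.",
    ethical_considerations="Check that accuracy is similar across accessibility needs.",
    caveats=["Not yet evaluated on enquiries submitted in Welsh."],
)

print(card.missing_sections())  # ['evaluation_data']
print(card.to_text())
```

Keeping the card as structured data rather than free text makes it easy to check automatically that no section has been skipped before a system goes live.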

One key omission from ChatGPT’s answers is the sustainability and environmental impact of the growing use of large-scale AI deployments. Running ChatGPT and similar technologies consumes significant amounts of water and considerable amounts of energy, with the associated carbon emissions. Even the use of search engines is having a growing environmental impact due to the increasing complexity of the AI underpinning the results. Governments looking to balance technological innovation with Net Zero objectives must give these factors significant weight.

Conclusion

Baringa: Harnessing AI can empower the UK government to automate tasks, analyse data, enhance citizen services, and ultimately improve efficiency and citizens’ lives. Ensuring this is done responsibly is as great a challenge as delivering the applications themselves – and it deserves as much consideration. Laying the foundations of Responsible AI now, given how fast AI is developing and proliferating, should be a priority for the Government and all organisations in the UK and internationally.

To find out more about responsible AI and data ethics in government, please get in touch with Callum Sparrowhawk, Adam Brocklesby or Nathan Burns.
