From buzzwords to business value: making AI work for your organisation

1 February 2025

Artificial Intelligence (AI) has become a household term – and for many, a source of fatigue. Often heralded as the next big “game changer,” it still feels abstract to professionals across industries. Leaders continue to ask the same fundamental question: “Where does AI actually deliver value for my organisation?”

AI takes many forms, from chatbots to data models to the code that powers them. Regardless of its application, AI should be approached like any other business investment: strategically planned, thoughtfully implemented, and measured by its ability to drive tangible outcomes. Certain types of AI models, particularly generative and black-box models, carry unique and material risks, including issues around explainability, bias, and control. These risks require active management and clear governance to ensure safe and compliant deployment. At Baringa, we believe the real value of AI lies in cutting through the hype to focus on what matters: identifying high-impact use cases, exploring pragmatic entry points, and aligning solutions with specific business needs. Success comes not from chasing trends, but from making deliberate, outcome-focused decisions that unlock long-term value.

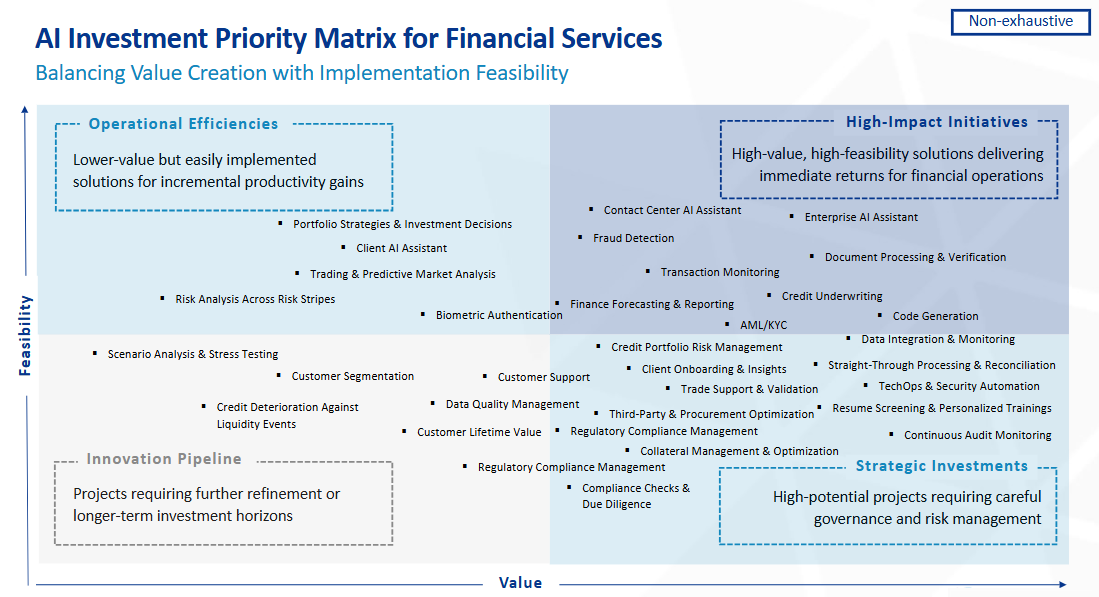

The AI priority matrix

AI is not a one-size-fits-all solution. What works for one firm may be ineffective for another. Before committing budget and resources, organisations must first assess where AI can generate meaningful value - and whether the investment required is feasible. That means defining the specific business problems AI is intended to solve and determining which approaches are best suited to address them.

One way to prioritise these decisions is to use an investment matrix with two axes: value and feasibility. The value axis considers whether a use case will drive cost savings, efficiency, revenue growth, or other measurable impact. The feasibility axis evaluates whether the organisation has the data quality, technical foundation, and operational readiness to support implementation. While this prioritisation model may look familiar, it reflects how financial services clients most effectively organise and act on AI opportunities.

This framework helps organisations prioritise AI opportunities across four categories:

- High-Impact Initiatives - Solutions with both high value and high feasibility that deliver immediate returns for financial operations.

- Strategic Investments - High-potential projects requiring strong governance and risk management.

- Operational Efficiencies - Lower-value, easy-to-implement use cases that yield incremental productivity gains.

- Innovation Pipeline - Emerging solutions that require further refinement or longer-term investments.

Often, the strongest starting point lies in initiatives that combine high value with high feasibility - solutions primed to generate quick, measurable wins across financial operations.
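The matrix above can be sketched in a few lines of Python. This is a minimal illustration, not a Baringa tool: the `UseCase` class, the 0-1 scoring scale, the 0.5 threshold, and the example scores are all hypothetical. The article defines High-Impact Initiatives (high value, high feasibility) and Operational Efficiencies (lower value, easy to implement) explicitly; placing Strategic Investments in the high-value, lower-feasibility quadrant and the Innovation Pipeline in the remaining quadrant is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    name: str
    value: float        # hypothetical 0-1 score: cost savings, efficiency, revenue growth
    feasibility: float  # hypothetical 0-1 score: data quality, technical foundation, readiness


def categorise(use_case, threshold=0.5):
    """Map a use case to a quadrant. The threshold is an illustrative assumption."""
    high_value = use_case.value >= threshold
    high_feasibility = use_case.feasibility >= threshold
    if high_value and high_feasibility:
        return "High-Impact Initiative"
    if high_value:
        return "Strategic Investment"    # assumed quadrant: high value, lower feasibility
    if high_feasibility:
        return "Operational Efficiency"  # lower value, easy to implement
    return "Innovation Pipeline"         # assumed quadrant: lower value, lower feasibility


def prioritise(use_cases, threshold=0.5):
    """Group use case names by quadrant, highest combined score first."""
    grouped = {}
    ranked = sorted(use_cases, key=lambda u: u.value + u.feasibility, reverse=True)
    for use_case in ranked:
        grouped.setdefault(categorise(use_case, threshold), []).append(use_case.name)
    return grouped


# Scores below are invented purely to exercise the function.
candidates = [
    UseCase("Contact centre assistant", 0.8, 0.8),
    UseCase("Credit underwriting", 0.9, 0.4),
    UseCase("Data quality checks", 0.4, 0.9),
]

for quadrant, names in prioritise(candidates).items():
    print(f"{quadrant}: {', '.join(names)}")
```

In practice the scores would come from a structured assessment with business and technology stakeholders rather than from single hand-picked numbers, and the threshold would be agreed as part of that exercise.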

Spotlight use cases – where AI is delivering real results

There is no shortage of possibilities when it comes to AI, but certain use cases consistently rise to the top. These examples stand out because they are valuable, feasible, and repeatable, making them strong candidates for early adoption:

Contact Centre AI Assistants reduce wait times, improve customer satisfaction, and enable 24/7 support without adding headcount. They’re also relatively easy to pilot using existing call logs and FAQs, offering fast return on investment with minimal disruption.

Document Processing and Verification automates data extraction from contracts, invoices, and forms - eliminating tedious manual reviews prone to error. This is particularly useful for onboarding, compliance, and operations teams managing high document volumes.

Code Generation tools accelerate development by automating boilerplate code, reducing bugs, and speeding up delivery timelines. With proper human oversight, they allow engineering teams to focus on more complex, high-impact tasks.

Fraud Detection systems monitor transactions in real time, identifying suspicious activity within large datasets without disrupting legitimate behaviour. When combined with behavioural or biometric signals, these tools offer strong protection in high-volume, high-risk environments.

Credit Underwriting is also gaining traction as a next frontier for AI, especially among lenders seeking to modernise decisioning models without compromising on fairness or transparency. AI tools now evaluate non-traditional data, such as cash flow, payment history, and behavioural patterns, to assess creditworthiness in thin-file applicants. However, as underwriting decisions carry significant legal and ethical weight, they fall under strict regulatory scrutiny.

Guidelines like NYDFS Circular Letter No. 7 are pushing firms to rethink how they govern and explain automated decisions. Institutions are expected to demonstrate model transparency, validation rigour, and risk oversight across the full lifecycle of an AI tool. That’s why underwriting is not just a promising use case - it’s a proving ground for responsible AI. Many organisations are turning to structured frameworks and accelerators to meet these expectations without slowing innovation.

Low-friction, high-impact use cases are emerging as ideal entry points, offering real returns without requiring major architectural overhauls. AI tools in this category are helping institutions improve service, boost efficiency, and reduce operational strain.

Quick wins – low lift, high learning

Not every organisation is ready to pursue large-scale AI, and that is both common and entirely valid. For teams that may not yet have the budget, centralised and curated data, or alignment on regulatory and privacy requirements (such as data protection and anonymisation), starting small can still create real momentum. A measured approach allows organisations to demonstrate impact, build internal trust, and lay a solid foundation for broader AI adoption while continuing to drive efficiency and strategic priorities.

These tactical use cases offer real value because they rely on internal data already available within the organisation - such as call logs, transaction records, and historical documents. Starting with familiar data sources allows firms to implement AI quickly and effectively while building trust and momentum across the organisation.

Customer Segmentation models, for example, can predict which clients are likely to stay, churn, or shift deposits. These insights enable institutions to take targeted, proactive steps - like offering tailored incentives or personalised outreach - to retain at-risk customers. This approach strengthens loyalty, prioritises support where it is needed most, and helps optimise limited marketing resources without requiring a full experience overhaul.

Data Quality Management is another strong entry point. AI can detect duplicates, flag inconsistencies, and uncover missing values in large datasets to improve reporting accuracy and streamline manual cleanup. For firms operating in highly regulated environments, improved data quality also supports compliance with supervisory expectations around data lineage, reporting integrity, and auditability.
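To make the three checks concrete, the sketch below profiles a small list of records for duplicates, missing values, and inconsistencies. It is deliberately a rule-based baseline using only the Python standard library, not an AI model; machine-learning approaches such as fuzzy matching or anomaly detection would typically build on checks like these. The field names, the `profile` function, and the sample records are hypothetical.

```python
from collections import Counter

REQUIRED_FIELDS = ("customer_id", "email", "country")  # hypothetical schema


def normalise(value):
    """Trim and lower-case strings so trivially different values compare equal."""
    return value.strip().lower() if isinstance(value, str) else value


def profile(records):
    """Return duplicate row indexes, rows with missing values, and inconsistent IDs."""
    keys = [tuple(normalise(r.get(f)) for f in REQUIRED_FIELDS) for r in records]
    counts = Counter(keys)
    duplicates = [i for i, key in enumerate(keys) if counts[key] > 1]

    missing = [
        i for i, r in enumerate(records)
        if any(normalise(r.get(f)) in (None, "") for f in REQUIRED_FIELDS)
    ]

    # Inconsistency rule (an assumption): one customer_id should map to one country.
    countries = {}
    for r in records:
        customer_id = r.get("customer_id")
        country = normalise(r.get("country"))
        if customer_id is not None and country not in (None, ""):
            countries.setdefault(customer_id, set()).add(country)
    inconsistent = sorted(cid for cid, seen in countries.items() if len(seen) > 1)

    return {"duplicates": duplicates, "missing": missing, "inconsistent_ids": inconsistent}


# Invented sample records, for illustration only.
records = [
    {"customer_id": "C1", "email": "a@example.com", "country": "UK"},
    {"customer_id": "C1", "email": "A@example.com ", "country": "uk"},
    {"customer_id": "C2", "email": "", "country": "US"},
    {"customer_id": "C3", "email": "c@example.com", "country": "US"},
    {"customer_id": "C3", "email": "c2@example.com", "country": "DE"},
]

report = profile(records)
print(report)
```

Returning row indexes and IDs rather than silently fixing records keeps a reviewable trail, which fits the auditability and data lineage expectations mentioned above.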

The AI checklist – scale with foundation

Before scaling or even launching an AI use case, organisations should ask a critical question: “Are we truly ready?”

Readiness goes far beyond technology. It requires engaging the right stakeholders, defining clear principles and risk thresholds, aligning with trusted frameworks, and embedding AI efforts into existing governance and compliance structures.

Every use case should tie directly to the business strategy, build on established data governance practices, and reflect a nuanced understanding of the evolving and often fragmented regulatory landscape. Equally important, a strong communication strategy is essential to foster transparency, build trust, and help teams understand what AI is and what it is not intended to do.

AI Checklist – Maximize Benefits, Compromise Nothing

Empowering people in the age of AI

AI’s greatest potential lies not just in processing power, but in partnership. At Baringa, we believe every successful AI journey starts with people, not platforms. For employees, the rise of AI can feel threatening - sparking real fears about being automated out of a role. However, when implemented with care, AI creates capacity rather than replacing people. It removes low-value, repetitive work so people can focus on high-value thinking that drives impact. As Co-Intelligence author Ethan Mollick puts it, “AI is your co-worker.” But to make that a reality, companies must build a culture of psychological safety - where teams are encouraged to explore, question, and even fail in pursuit of smarter ways of working. The most scalable AI innovations often start quietly, from the ground up. We don’t just implement AI - we create environments where people are empowered to lead its evolution. That’s what people-first consulting looks like in the age of AI.

The use case is just the beginning

One of the most common mistakes organisations make is rushing to deploy an AI tool without first building the structure to support it. A strong use case is essential, but it is only the beginning. Execution, governance, and alignment are what transform AI concepts into lasting business value.

That value comes with risk. Deploying AI in production environments without proper oversight can introduce hidden vulnerabilities - from biased outputs to security flaws or regulatory non-compliance. We break this down further in our blog: Key Questions to Ask in Navigating the Evolving US AI Regulatory Landscape. These risks scale as quickly as the models themselves. That’s why governance cannot be an afterthought; it must be embedded from the outset, with controls that evolve alongside the technology.

Every successful AI implementation relies on trust, clear ownership, and disciplined delivery. When those elements are sound, the rest follows naturally.

We help clients embed AI into the fabric of their business - not just by deploying tools, but by aligning the right use cases to strategic goals and delivering impact at scale. Our proprietary risk assessment framework ensures AI is built responsibly, with clear guardrails to navigate today’s shifting regulatory landscape.

More than focusing on what AI can do, we focus on what Baringa enables. From accelerating enterprise initiatives like Portfolio Optimisation and Cash Flow Forecasting, to piloting tactical wins such as Regulatory Horizon Scanning or Pricing Optimisation, we help clients unlock value at every stage of their AI journey. Whether the goal is efficiency, resilience, or growth, we bring the clarity, structure, and delivery experience needed to turn ambition into action.
